Denise was invited to be the first keynote speaker of Conversion Hotel 2021. Her story is about the journey bol.com’s Team Experimentation made to make experimenting ridiculously easy for all their bol.com colleagues. You can also read the announcement below.

A video of the talk will be available soon. The article below reflects what Denise said in her talk.

Article: Experimentation at bol.com

I love being at Conversion Hotel, because I don’t have to give a general introduction about why experimentation is important. And I don’t need to tell you about the what, because the audience is packed with experienced experimenters. I love it because I can talk about the things we are currently doing at bol.com: more about the process, the how. What we are currently doing led to the Team Experimentation Culture Award for bol.com’s Team Experimentation. So if you also want to win this great prize, you’d better read on.

In 2016 a group of enthusiasts at bol.com started experimenting and investigating the possibilities. At bol.com we didn’t have an experimentation tool like VWO or Optimizely. Depending on the wishes of the product owner, some functionalities could be experimented with; most couldn’t. We had a bucketing system, which had been created by an intern.

We called ourselves the CRO team. We were mainly business analysts, plus a web analyst, and sometimes a developer joined. We ran some experiments. And apparently someone noticed, because I was invited to speak at DiDo #23 of Online Dialogue about the pitfalls of CRO at bol.com.

It was in March 2017 that I showed a slide with numbers on it. Each number represented something from the history of bol.com, and among them was the number of experiments we ran at bol.com per year. I revealed that it was 25, and to be honest, even that was an overestimate. I was so embarrassed that bol.com (the biggest Dutch online retailer) hardly ever experimented.

Back then we thought we knew best what our customers wanted, and therefore all our great ideas were simply implemented. It was mostly about innovation and less about optimization.

Thanks to the chemistry within the group of enthusiasts, we continued our hobby. The position of CRO specialist doesn’t exist at bol.com; it was just a side project next to our normal day jobs. We had a lot of fun, and it was also a competition over who could come up with the experiment with the biggest gain.

In 2018, our goal was to run 100 experiments, based on the scarce possibilities we had: for example, adjusting text, varying the order of elements on a page, and adding a logo or a different colour here and there. Executing the experiments was the ‘practice’ part of our initiative. One of the team members was an outgoing person and very good at marketing the results of those experiments.

I was more modest and thought more about the long term and the opportunities for bol.com. That covers the ‘preach’ part of our CRO initiative. Preaching was about telling teams how they could experiment. We also bought a tool, Effective Experiments, to store our ideas and learnings and to give more structure to the process (something I learned during Peep Laja’s course).

We did not achieve the target of 100 experiments in 2018, but we did achieve an additional yearly revenue of 100 million euros with those experiments. And if a group of enthusiasts could make this happen with a scattershot approach, then surely we could achieve more with a dedicated team. And so it happened: in 2019, Team Experimentation was born.

In 2019 our goal was to help hundreds of people at bol.com to run experiments more effectively. And the first step to make that easy was to combine all scattered tools and solutions into one platform.

We created the XPMT platform. We started off with a team of five: two externals, a very experienced bol.com developer who knows the landscape very well, a young professional and a data scientist.

It is not just about creating a platform. It is also about changing a mindset. So besides developing the platform, we are also a group of consultants within the company. Anybody within the company with a question regarding experimentation can reach out to us.

Part of the platform is the administration part. At first, there was only one text box in which you could enter a hypothesis. But it turned out that the quality of the entered hypotheses could be improved. So we chopped it up into separate questions, and the answers together form the hypothesis (inspired by the Experiment Hypothesis Creator, Conversion’s Hypothesis Framework and the Experimentation Kit by A/B Sensei). This turned out to be helpful, but you can still generate bullshit with it.
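To make the idea of “separate questions that together form the hypothesis” concrete, here is a minimal sketch. The four fields and the sentence template are hypothetical, loosely following the common “Because we saw…, we expect that…” pattern; they are not the actual questions in the XPMT platform. Note that the only validation is that every answer is filled in, which is exactly why a structured form still lets you produce a poor hypothesis.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Hypothesis:
    """A hypothesis assembled from separate answers (field names are hypothetical)."""

    observation: str      # what data or research prompted the idea
    change: str           # what we are going to change
    expected_effect: str  # what we expect the change to do
    metric: str           # how we will measure the effect

    def __post_init__(self) -> None:
        # Every question must be answered; this checks presence, not quality.
        for f in fields(self):
            if not getattr(self, f.name).strip():
                raise ValueError(f"'{f.name}' must not be empty")

    def render(self) -> str:
        """Combine the separate answers into one hypothesis sentence."""
        return (
            f"Because we saw {self.observation}, we expect that "
            f"{self.change} will {self.expected_effect}. "
            f"We will measure this with {self.metric}."
        )


if __name__ == "__main__":
    h = Hypothesis(
        observation="visitors asking about delivery times",
        change="showing the delivery time near the buy button",
        expected_effect="increase the add-to-cart rate",
        metric="add-to-cart rate per visitor",
    )
    print(h.render())
```

Splitting the input this way makes missing reasoning visible (an empty field is rejected), but judging whether the observation actually supports the expected effect remains a human task.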

That also brings me to a challenge I face: what is my responsibility regarding the quality of all the experiments? Perhaps in time my role will shift more towards a police officer overseeing quality. Or a librarian who knows what has been experimented with. Or perhaps a combination of both.

So first, we phased out Effective Experiments. It was an easy win. The remaining scattered tools we kept in place, so teams that had already started experimenting could continue doing so.

In the meantime, we started on more complex parts of our landscape. For instance, it wasn’t yet possible to experiment with product content (because of caching). By facilitating that, we revealed a lot of new potential. And if we could prove that we were able to nail that, we could do anything: we got experimentation going in what was seen as an impregnable fortress. Admittedly, at first with a hack, which has since been replaced by a more sustainable solution. But this is how we got things moving. The search team also embraced the functionalities of our platform. The search team is full of data lovers, so that was an easy target audience.

In 2019 we didn’t run many more experiments than in 2018. But the positive thing was that more teams were involved: in 2018 most experiments were executed by the CRO team, while in 2019 they were run by multiple teams.

In 2019 Team Experimentation started working with OKRs. We didn’t completely stick to the rules, and came up with three objectives:

- Master the Data: have a good understanding of the data, to really understand what all the metrics mean and how they are computed.
- Create an Experimentation Heaven: make it ridiculously easy for every bol.com’er to run experiments. This is mainly about technical solutions.
- Spread the (experimentation) virus: make others enthusiastic about the opportunities of experimentation. This is also about changing the culture of the company towards becoming more hypothesis-driven.

Regarding the bol.com landscape, you can imagine that a lot of different teams and services are involved in getting everything done. Halfway through 2020 it was decided that bol.com would be organized as a product organization. To be honest, it is not yet implemented ideally, but it already gives teams more focus and also makes them more aware of their responsibility to prove their added value. Experimentation gives colleagues the opportunity to become smarter: they learn what makes their product better and what doesn’t.

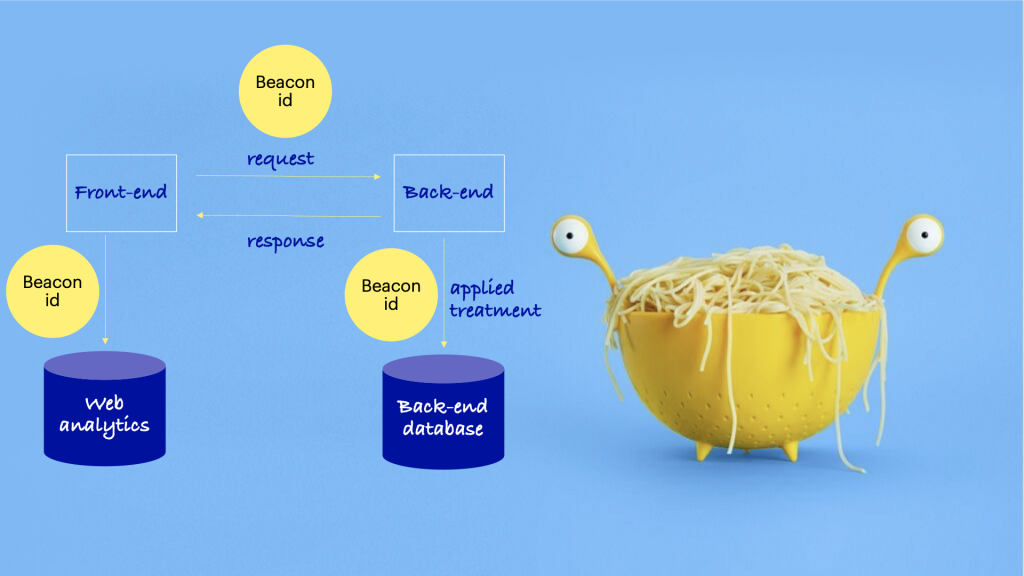

As Team Experimentation we created the BeaconID. When the front-end needs input from the back-end (for instance, the delivery time of a product), the BeaconID is sent along with the request. The BeaconID is also stored with the behaviour measurement.

The back-end provides the front-end with the right delivery time. The back-end team can decide for themselves whether this request is part of an experiment, and stores the applied treatment in a database along with the BeaconID. Based on the BeaconID we can combine the applied treatment with the actual behaviour, and from that we learn whether the change had an impact on customer behaviour or not.
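The join described above can be sketched in a few lines. This is a minimal in-memory illustration, not bol.com’s implementation: the record types, the `converted` flag and the function name `join_by_beacon` are hypothetical, and in practice the join would run over the treatment database and the behaviour measurement data. It also counts per request rather than per visitor, which a real analysis would aggregate further.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TreatmentRecord:
    """Stored by a back-end service when it applies a treatment (hypothetical shape)."""

    beacon_id: str
    experiment: str
    treatment: str


@dataclass(frozen=True)
class BehaviourEvent:
    """Stored by the behaviour measurement, tagged with the same BeaconID (hypothetical shape)."""

    beacon_id: str
    converted: bool


def join_by_beacon(
    treatments: list[TreatmentRecord], events: list[BehaviourEvent]
) -> dict[tuple[str, str], tuple[int, int]]:
    """Return {(experiment, treatment): (events, conversions)}, joined on BeaconID."""
    # One BeaconID can take part in several experiments, so keep a list per ID.
    by_beacon: dict[str, list[TreatmentRecord]] = defaultdict(list)
    for record in treatments:
        by_beacon[record.beacon_id].append(record)

    totals: dict[tuple[str, str], list[int]] = defaultdict(lambda: [0, 0])
    for event in events:
        # Events without a matching treatment record were not part of any experiment.
        for record in by_beacon.get(event.beacon_id, []):
            key = (record.experiment, record.treatment)
            totals[key][0] += 1
            totals[key][1] += int(event.converted)
    return {key: (n, c) for key, (n, c) in totals.items()}
```

For example, with treatments `b1 → control` and `b2 → variant` in a `delivery-time` experiment and events for `b1` (not converted), `b2` (converted) and `b3` (converted), the result is `{("delivery-time", "control"): (1, 0), ("delivery-time", "variant"): (1, 1)}`; `b3` is ignored because no back-end applied a treatment to it. The design point is that the back-end only has to store the BeaconID with its treatment, without knowing anything about the front-end measurement.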

If you want to know more about the technical details of the BeaconID, please read the article ‘Empowering autonomous experimentation in a distributed ecosystem’ by my colleague Fabio Ricchiuti.

The effect of making things easier on the technical side in this way was that even more teams were able to run experiments autonomously, and more (back-end) teams got involved. For instance, a logistics team optimizing picking routes in the warehouses, but also a financial team offering the right payment methods in the right order to the customer.

In 2021 we broadened our scope even more.

Experimenting is not just about running A/B tests; it is about a hypothesis-driven mindset. So we are challenging the organization in more diverse ways. If you are not able to run an A/B test, you might be able to do a before/after analysis. We also allow you to store those types of insights in our platform. And we are currently making it possible to store, for example, market research and UX research as well. We want to become a learning center.

And that brings me to another possible future for my role: should I become the author of all meta-analyses, or should I just encourage the experts within their domains to create their own meta-analyses?

While we are broadening our scope we are also looking for more partners within the organization.

For instance, the group of people responsible for rolling out the product organization. They created a model called ‘Product Innovation Power’ (PIP), a self-assessment tool to measure the innovation power of your product and identify actions for improvement. The PIP consists of three dimensions, each with five subdimensions. These dimensions span your product’s ability to deliver value for the customer (Value Focus), the quality of your technology (Tech Health) and the extent to which your team is organized for success while having fun (Team Health). PIP scores where a team stands on, for instance, Data & Experimentation, and gives you tips about possible improvements.

But we also explained more hardcore topics, like how the bucketing actually works, what happens to the bucketing if you change the distribution, and what happens if you restart your experiment. Also how to write a good hypothesis, a detailed explanation of what significance actually means, and what to do if your experiment doesn’t have a significant outcome. As Team Experimentation, we really exude our expertise without becoming conceited. At least, we try to.
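The article does not describe how bol.com’s bucketing works internally, so the following is only a sketch of one common approach, useful for reasoning about the two questions above. Assumptions: a visitor ID is hashed together with a per-experiment salt into one of 10,000 fixed buckets, and treatments own consecutive bucket ranges according to their weights. The names `bucket`, `assign` and `BUCKETS` are hypothetical.

```python
import hashlib

BUCKETS = 10_000  # fixed number of buckets; weights are expressed in bucket units


def bucket(visitor_id: str, salt: str) -> int:
    """Map a visitor deterministically to a bucket in [0, BUCKETS)."""
    digest = hashlib.sha256(f"{salt}:{visitor_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % BUCKETS


def assign(visitor_id: str, salt: str, allocation: list[tuple[str, int]]) -> str:
    """Pick a treatment by walking the cumulative bucket ranges in order."""
    if sum(weight for _, weight in allocation) != BUCKETS:
        raise ValueError("weights must add up to BUCKETS")
    b = bucket(visitor_id, salt)
    upper = 0
    for treatment, weight in allocation:
        upper += weight
        if b < upper:
            return treatment
    raise AssertionError("unreachable: the weights cover every bucket")


if __name__ == "__main__":
    visitors = [f"visitor-{i}" for i in range(1000)]
    fifty_fifty = [("control", 5000), ("variant", 5000)]
    ninety_ten = [("control", 9000), ("variant", 1000)]
    before = {v: assign(v, "exp-42", fifty_fifty) for v in visitors}
    after = {v: assign(v, "exp-42", ninety_ten) for v in visitors}
    moved = sum(before[v] != after[v] for v in visitors)
    print(f"{moved} of {len(visitors)} visitors switched treatment")
```

Under these assumptions, changing the distribution from 50/50 to 90/10 only moves visitors in buckets 5,000 to 8,999 from variant to control; nobody moves the other way. Those visitors have now seen both treatments, which pollutes the comparison. Restarting the experiment with a new salt reshuffles every visitor, so behaviour from before the restart should not be mixed with behaviour after it.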

The additional benefit is that the departments that have these additional metrics as their KPIs start promoting experimentation to validate the impact of the applied changes on their processes and targets.

What I am also aiming for is to add information about the performance (page speed) of the treatments, and to make the regular release process part of our bucketing system.

As Team Experimentation we became more and more enthusiastic about our impact and opportunities. Our enthusiasm led us to enter the Team Experimentation Culture Awards.

So this is our 1,000-character entry:

When the verdict came, we were all sitting together having a barbecue in the garden of our engineering manager. The internet connection failed at the moment of the announcement, but from all the texts I received we knew that we had won.

I summarized the results of October in a slide and posted it on Workplace. That is part of our ‘Spread the virus’ objective: post at least 25 messages about experimentation on our internal communication platform each quarter. These can be about new functionalities or interesting articles.

We don’t communicate about the results of the experiments, because we don’t own these experiments. The teams themselves communicate about their results. They are the heroes, and they show that we are doing great experiments at bol.com.

That we do cool experiments is also shown by the fact that an experiment around delivery times won a DDMA CRO Award in 2021.

To conclude, a bit about what lies ahead of us.

It is our ambition to also give bol.com’s partners the opportunity to experiment, so that they can come up with hypotheses but also be part of experiments, for instance when they have to add additional content.